Reflections on the Mediated Body

In our readings for this class, we have been exploring concepts of bodies and presence in virtual spaces. Our assignments have explicitly asked us to consider the feelings evoked by the different ways we have learned to represent and experience our bodies online. One reading in particular, Merleau-Ponty’s “The Experience of the Body and Classical Psychology,” didn’t fully reveal its relevance to our work until I attempted to build my final project. Briefly, the reading explores the paradox of experiencing ourselves in the world:

My visual body is certainly an object when we consider the parts further away from my head, but as we approach the eyes it separates itself from objects and sets up among them a quasi-space to which they have no access. And when I wish to fill this void by resorting to the mirror’s image, it again refers me back to an original of the body that is not out there among things, but on my side, prior to every act of seeing.

Maurice Merleau-Ponty

Although the reading never once mentions the internet, webcams, or even computers, its philosophical questions about how we see ourselves could not be more relevant to the technologies we have been practicing with and building in this class.

FUN FACT: It’s impossible to see with your own eyes whether your nose is the highest point on your body at any given moment.

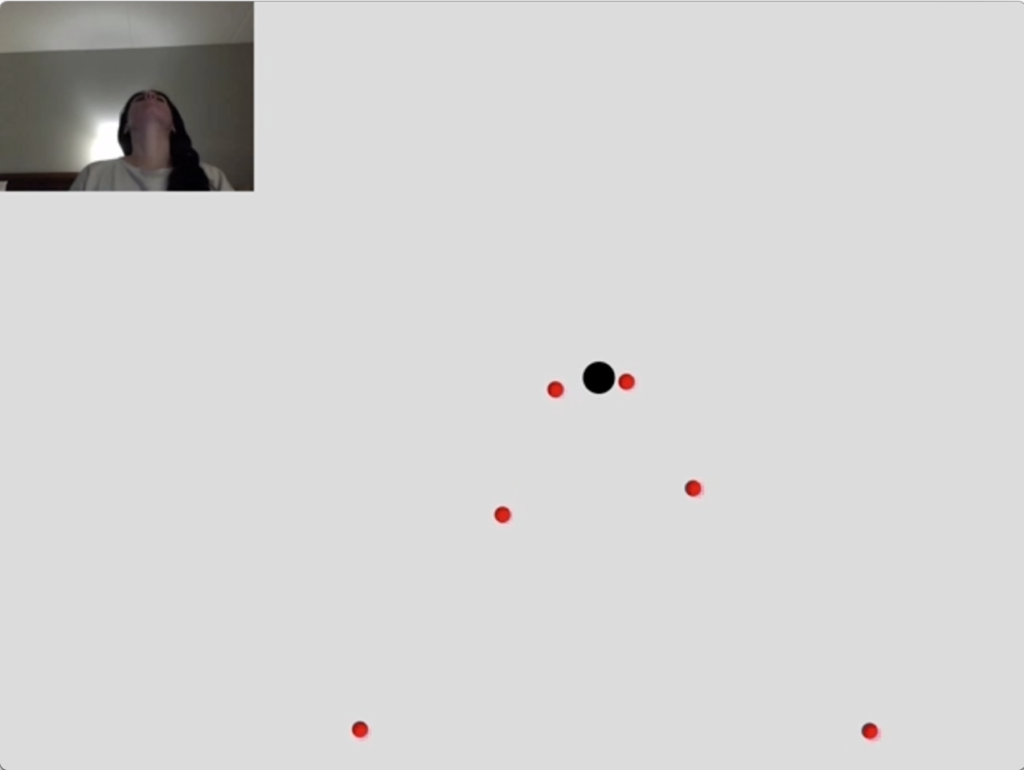

The example above illustrates what I mean. The program is Lisa’s example from her PoseNet demo: the red points represent my eyes, ears, nose, and shoulders, and the black point represents the highest of those tracked body parts. In playing with it, I realized I could never actually see for myself when my nose was the highest point on my body. I had to take a screen recording to catch that exact moment. Whether or not I could see it, it happened: I experienced it in my own body in the moment it happened, but I didn’t really feel it until I could see it happen in the abstract.
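The “highest point” check itself is easy to state in code. Here is a minimal TypeScript sketch, not Lisa’s actual demo code: it assumes keypoints shaped roughly like ml5’s PoseNet output (a part name, a confidence score, and an x/y position), and the 0.2 confidence cutoff is a made-up value. Because canvas y-coordinates grow downward, the highest point on screen is the keypoint with the smallest y.

```typescript
// Assumed keypoint shape, modeled loosely on ml5.js PoseNet output.
interface Keypoint {
  part: string;
  score: number; // confidence between 0 and 1
  position: { x: number; y: number };
}

// Parts tracked in the demo described above.
const TRACKED_PARTS = [
  "nose", "leftEye", "rightEye", "leftEar", "rightEar",
  "leftShoulder", "rightShoulder",
];

// Return the tracked keypoint closest to the top of the canvas.
// Canvas y grows downward, so "highest" means "smallest y".
// Keypoints below minScore (a hypothetical cutoff) are ignored.
function highestKeypoint(
  keypoints: Keypoint[],
  parts: string[] = TRACKED_PARTS,
  minScore = 0.2,
): Keypoint | undefined {
  let best: Keypoint | undefined;
  for (const kp of keypoints) {
    if (parts.indexOf(kp.part) === -1 || kp.score < minScore) continue;
    if (best === undefined || kp.position.y < best.position.y) {
      best = kp;
    }
  }
  return best;
}

// Example frame: head tilted back so the nose sits just above the eyes,
// plus a low-confidence ear guess near the top that gets skipped.
const frame: Keypoint[] = [
  { part: "nose", score: 0.95, position: { x: 320, y: 140 } },
  { part: "leftEye", score: 0.9, position: { x: 300, y: 150 } },
  { part: "rightEye", score: 0.9, position: { x: 340, y: 152 } },
  { part: "leftEar", score: 0.05, position: { x: 270, y: 100 } },
  { part: "leftShoulder", score: 0.8, position: { x: 230, y: 300 } },
];

console.log(highestKeypoint(frame)?.part); // prints: nose
```

Run once per frame, a check like this catches the moment my eyes can’t: the instant the nose wins is just a comparison between numbers.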

This raises interesting questions: Why does the moment my nose is the highest point on my body only feel real when I experience it secondhand? Put another way: What can the mediated experience of our bodies add to the lived experience of our bodies?

Mediated bodies did not begin with the internet or even television. Dancers, choreographers, and spiritual and religious practitioners have been attempting to create new ways of experiencing and understanding bodies and their relation to each other, the world, and the gods since… well, forever.

The rigidly prescribed baroque dances of Louis XIV’s court literally created the reality of each courtier’s rank and standing.

In the Yoruba/Lucumí tradition, the orisha come alive within those who dance their dances and devote themselves to their rites.

In early 20th-century Buenos Aires, men could dance the tango together and express their affection within a socially acceptable public venue.

Even a mirror reverses our image, so we can never actually see ourselves as we are, so to speak.

These examples show how embodied interaction and performance are more than expressive media. They are a technology, a tool for bending reality and the way we perceive and experience it. A dance hall is as much a mediated place as a p5 sketch, and a p5 sketch has the potential to have as much social and bodily impact as a dance hall.

Ways of Experiencing the Mediated Body in the Browser

In this class, we concerned ourselves with ways of experiencing the body in the browser. In order to draw, manipulate, and share our bodies on the screen, we had to conceive of them at various levels of abstraction:

- Skeletons, made up of points on the body

- Pixels, made up of red, green, blue, and transparency values

- A static image that is redrawn 60 times a second, made up of pixels from a live video stream
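The pixel level is the most literal of these abstractions. A canvas frame (and, at a pixel density of 1, p5’s pixels[] array after loadPixels()) is a flat list of numbers, four per pixel, stored row by row. The TypeScript sketch below shows that indexing arithmetic on a stand-in buffer; the Frame type and the helper names are my own illustration, not p5’s API.

```typescript
// Hypothetical stand-in for one video frame: a flat RGBA buffer laid out
// row by row, like canvas ImageData or p5's pixels[] at pixelDensity(1).
interface Frame {
  width: number;
  height: number;
  data: Uint8ClampedArray; // length = width * height * 4
}

type RGBA = [number, number, number, number];

function makeFrame(width: number, height: number): Frame {
  return { width, height, data: new Uint8ClampedArray(width * height * 4) };
}

// Each pixel takes 4 slots (r, g, b, a), and rows are stored one after another.
function pixelIndex(frame: Frame, x: number, y: number): number {
  return 4 * (y * frame.width + x);
}

function getPixel(frame: Frame, x: number, y: number): RGBA {
  const i = pixelIndex(frame, x, y);
  const d = frame.data;
  return [d[i], d[i + 1], d[i + 2], d[i + 3]];
}

function setPixel(frame: Frame, x: number, y: number, [r, g, b, a]: RGBA): void {
  const i = pixelIndex(frame, x, y);
  frame.data[i] = r;
  frame.data[i + 1] = g;
  frame.data[i + 2] = b;
  frame.data[i + 3] = a;
}

// A 2x2 frame: paint the top-right pixel opaque red.
const tiny = makeFrame(2, 2);
setPixel(tiny, 1, 0, [255, 0, 0, 255]);
console.log(pixelIndex(tiny, 1, 0));          // prints: 4
console.log(getPixel(tiny, 1, 0).join(","));  // prints: 255,0,0,255
```

At 640×480, that is 1,228,800 numbers per frame, which gives a sense of how much data this level of abstraction hands us on every redraw. On high-density displays, p5 scales pixels[] by pixelDensity(), so the index arithmetic would need to account for that.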

Again, I feel it necessary to keep in mind that, like all data, the data our bodies have been converted into here is not objective. These data points were conceived of and measured by other people, and those people were themselves influenced by cultural ways of understanding and representing the body.

The farther down the rabbit hole of abstraction we go, the more discretely we must segment our bodies, the longer our code gets, and the more complex our understanding of bodies in space, both real and cyber, becomes. It raises the question: how do those dancers do it? How do we bridge the gap between sensing and doing?

For dancers, the answer is years and years of training to build pathways in their brains and muscles. This is a process I understand. However, I struggle to construct those pathways within my code. I’m still working out how to bridge the gap between sensing and doing within the browser.

When I run into problems coding, I like to take a step back and make sure I understand how to do everything I’m trying to do. I break the problem into actionable chunks and write out the code for each one in isolation.

As of today, I can…

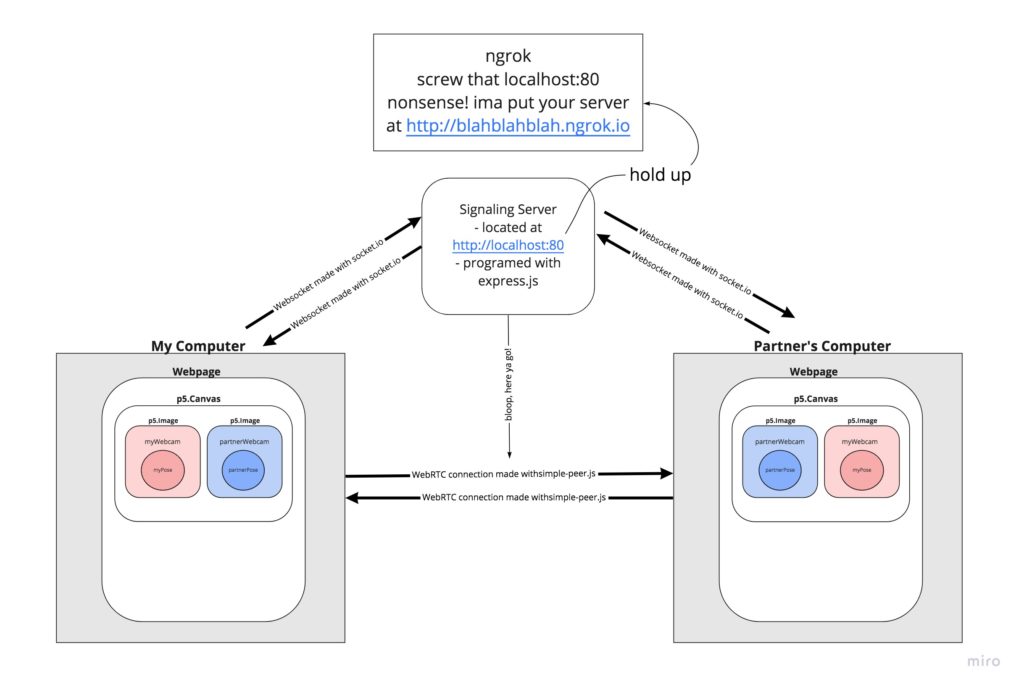

- Make a peer-to-peer connection (WebRTC + simple-peer.js) using a signaling server (express.io running on localhost)

- Make a video chat app using p5

- Send and evaluate PoseNet poses over a peer connection

- Track and compare the positions of keypoints in the poses in relation to the canvas

- Measure the distance between keypoints on the same pose and between two poses at any given moment

- Manipulate p5 objects on the canvas based on the positions of keypoints in relation to each other and the canvas
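Most of the measuring in that list comes down to the same arithmetic: Euclidean distance between two points, optionally after expressing positions relative to the canvas so that two peers with different video sizes can be compared. Inside a p5 sketch, p5’s built-in dist(x1, y1, x2, y2) covers the first part. The plain TypeScript version below is a hedged sketch; the Point type, the normalize helper, and the canvas sizes are assumptions for illustration.

```typescript
interface Point { x: number; y: number; }

// Euclidean distance, the same quantity p5's dist() returns.
function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Express a position as a fraction of the canvas (0..1 on each axis),
// so poses from differently sized canvases share one coordinate frame.
function normalize(p: Point, width: number, height: number): Point {
  return { x: p.x / width, y: p.y / height };
}

// Same pose: distance between my two eyes, in pixels.
const myLeftEye: Point = { x: 300, y: 150 };
const myRightEye: Point = { x: 340, y: 150 };
console.log(distance(myLeftEye, myRightEye)); // prints: 40

// Two poses: my nose on a 640x480 canvas vs. a peer's nose on a 320x240 canvas.
console.log(distance({ x: 320, y: 240 }, { x: 160, y: 120 })); // prints: 200
const myNose = normalize({ x: 320, y: 240 }, 640, 480);   // { x: 0.5, y: 0.5 }
const peerNose = normalize({ x: 160, y: 120 }, 320, 240); // { x: 0.5, y: 0.5 }
console.log(distance(myNose, peerNose)); // prints: 0
```

The raw pixel distance says the two noses are 200 pixels apart, while the normalized positions show both are dead center in their frames. Which one counts as “close” depends on how the two videos end up drawn on the shared canvas.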

As of today, I cannot…

- Send a single data object over the peer connection that includes both the webcam frame drawn to a p5 image and the current pose from PoseNet

- Reliably get skeletons from PoseNet

Let’s look at the latter issue first; I’ll describe how it inspired my final project idea.

Issues with PoseNet

The more I play with PoseNet, the more I realize how unreliable it can be. Specifically, I had to refresh multiple times before the skeleton array showed anything, both when sitting and when standing in front of my laptop webcam, even when my keypoints were showing up. I can’t seem to isolate the issue or bug (if there is one).

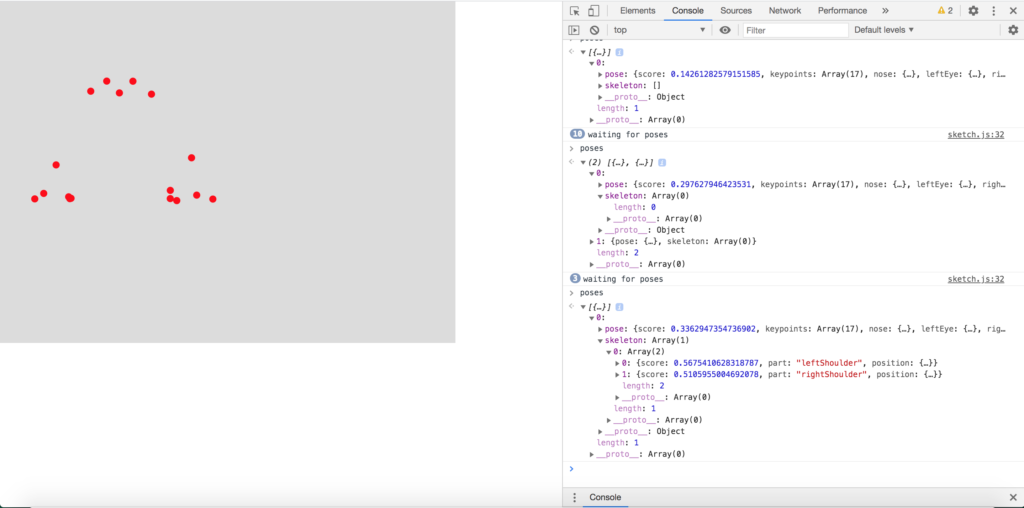

This is a screenshot of my console in Chrome while running the PoseNet example in Lisa’s demo from Class 3. On the right, under poses[0].skeleton[0], only the most recent pose (captured while I was sitting in front of my computer) has any data in its skeleton array. The previous pose, which you can see above the “waiting for peers” message, was captured while I was standing with all keypoints in view, yet it has no skeleton data. Even when I stay seated and don’t change position much, the skeleton array sometimes goes empty.

I assume this is because PoseNet isn’t confident enough in the keypoints it thinks it is detecting, and so doesn’t register them as a skeleton. Perhaps this could be fixed with a hardware adjustment: using a webcam that picks up my full body more clearly than my laptop’s built-in webcam.
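If that assumption is right, the empty array has a fairly mechanical explanation. My understanding is that PoseNet builds its skeleton by walking a fixed list of connected joint pairs and keeping a pair only when both joints clear a minimum confidence (ml5’s PoseNet exposes this as a minConfidence option). The TypeScript sketch below reimplements that idea to show how a frame can have visible keypoints but an empty skeleton when the drawing code uses a looser threshold than the skeleton does. It is not the library’s code: the connection list is a partial, hypothetical one, and the scores are invented.

```typescript
interface Keypoint {
  part: string;
  score: number;
  position: { x: number; y: number };
}

type Bone = [Keypoint, Keypoint];

// Partial, hypothetical list of connected joints (upper body only).
const CONNECTIONS: Array<[string, string]> = [
  ["leftShoulder", "rightShoulder"],
  ["leftShoulder", "leftElbow"],
  ["leftElbow", "leftWrist"],
  ["rightShoulder", "rightElbow"],
  ["rightElbow", "rightWrist"],
  ["leftShoulder", "leftHip"],
  ["rightShoulder", "rightHip"],
  ["leftHip", "rightHip"],
];

// Keep a bone only if both of its joints were detected with score >= minConfidence.
function buildSkeleton(keypoints: Keypoint[], minConfidence: number): Bone[] {
  const byPart: { [part: string]: Keypoint } = {};
  for (const kp of keypoints) byPart[kp.part] = kp;

  const bones: Bone[] = [];
  for (const [a, b] of CONNECTIONS) {
    const ka = byPart[a];
    const kb = byPart[b];
    if (ka && kb && ka.score >= minConfidence && kb.score >= minConfidence) {
      bones.push([ka, kb]);
    }
  }
  return bones;
}

// Sitting at a laptop: shoulders are clear, elbows are shaky guesses,
// and wrists and hips are out of frame entirely.
const seated: Keypoint[] = [
  { part: "leftShoulder", score: 0.8, position: { x: 220, y: 380 } },
  { part: "rightShoulder", score: 0.8, position: { x: 420, y: 380 } },
  { part: "leftElbow", score: 0.3, position: { x: 180, y: 470 } },
  { part: "rightElbow", score: 0.3, position: { x: 460, y: 470 } },
];

// A drawing loop that shows keypoints above 0.2 would draw all four points...
console.log(buildSkeleton(seated, 0.2).length); // prints: 3
// ...but a 0.5 cutoff keeps only the shoulder line.
console.log(buildSkeleton(seated, 0.5).length); // prints: 1
// With a stricter cutoff, nothing survives at all.
console.log(buildSkeleton(seated, 0.85).length); // prints: 0
```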

Regardless, not being able to see my body reflected back at me the way I expect is annoying and, perhaps more notably, disorienting. It’s very interesting to me that I get the same feeling of discomfort at not seeing my skeleton data in the console that I feel when I see my keypoints on the canvas in places where my joints are not in the real world.

When I realized this, I wanted to explore that discomfort and disorientation. On reflection, it was the strongest feeling I had while interacting with our in-class examples. Perhaps by living in this discomfort for a while, I could gain more insight into how to bridge the sensing-doing gap.

Final Project Concept

To make the explorations of disorientation described above interactive, I wanted to build a webchat app with hidden rules that would both induce disorientation and subvert the expectations we have of this type of audio/video interaction.

Here are some of the rules I imagined:

- If the users’ noses touched, the webcam images would move to random positions on the page.

- If your right hand became the topmost point on your body, a funny JPG would be drawn on top of it for the rest of the chat.

- If one user’s hand touched the other user’s nose (i.e., gave them a “boop”), then the latter user’s frame rate would slow and their image would start to trail.
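Each of these rules boils down to a geometric test on two poses. A minimal TypeScript sketch follows, under several assumptions: both users’ poses have already been mapped into the same canvas coordinates; PoseNet has no hand keypoint, so the wrist stands in for the hand; and the 30-pixel touch radius is an arbitrary guess that would need tuning. The pose shape and function names are hypothetical.

```typescript
interface Point { x: number; y: number; }

// Simplified pose: part name -> position, already in shared canvas coordinates.
type Pose = { [part: string]: Point | undefined };

function distance(a: Point, b: Point): number {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  return Math.sqrt(dx * dx + dy * dy);
}

// Two points "touch" if both were detected and lie within `radius` pixels.
function touching(a: Point | undefined, b: Point | undefined, radius: number): boolean {
  return a !== undefined && b !== undefined && distance(a, b) < radius;
}

// Rule 1: the users' noses touch.
function nosesTouch(me: Pose, peer: Pose, radius = 30): boolean {
  return touching(me.nose, peer.nose, radius);
}

// Rule 2: the right wrist (standing in for the hand) is above every other
// detected part. Canvas y grows downward, so "above" means a smaller y.
function rightWristIsHighest(pose: Pose): boolean {
  const wrist = pose.rightWrist;
  if (wrist === undefined) return false;
  for (const part in pose) {
    const p = pose[part];
    if (part !== "rightWrist" && p !== undefined && p.y <= wrist.y) return false;
  }
  return true;
}

// Rule 3: a "boop", where either of the booper's wrists lands on the target's nose.
function boops(booper: Pose, target: Pose, radius = 30): boolean {
  return (
    touching(booper.leftWrist, target.nose, radius) ||
    touching(booper.rightWrist, target.nose, radius)
  );
}

const me: Pose = {
  nose: { x: 200, y: 150 },
  rightWrist: { x: 260, y: 80 },
  leftShoulder: { x: 170, y: 260 },
};
const peer: Pose = {
  nose: { x: 215, y: 160 },
  leftWrist: { x: 205, y: 145 },
};

console.log(nosesTouch(me, peer));    // prints: true (about 18 px apart)
console.log(rightWristIsHighest(me)); // prints: true
console.log(boops(peer, me));         // prints: true (peer's left wrist is on my nose)
console.log(boops(me, peer));         // prints: false
```

In the app, checks like these would run once per frame on the latest local and remote poses, and a true result would trigger the matching effect.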

This webchat app would have transmitted the webcam streams of two users over a peer-to-peer channel with a PoseNet pose underlying the RGB video stream. Here is an outline of what that architecture would look like.

Drawing the webcam streams to p5 images on a canvas would not only let me manipulate the webcam images but also let me send and receive them together with the PoseNet poses in a single data object over the WebRTC peer connection.
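One possible shape for that combined object is sketched below in TypeScript. The FrameMessage type, its field names, and the encode/decode helpers are hypothetical. The idea is simply to serialize the frame (for instance, as a JPEG data URL from the canvas element’s toDataURL()) and the pose together as one JSON string. In the browser, that string could be passed to simple-peer’s peer.send() and parsed again in the peer’s 'data' event handler.

```typescript
interface Point { x: number; y: number; }

// Hypothetical message format: one webcam frame plus the pose detected on it,
// so the receiver never has to match images and poses up after the fact.
interface FrameMessage {
  kind: "frame";
  sentAt: number;                  // sender's timestamp in milliseconds
  image: string;                   // e.g. a JPEG data URL of the canvas
  pose: { [part: string]: Point };
}

function encodeFrame(image: string, pose: { [part: string]: Point }, sentAt: number): string {
  const msg: FrameMessage = { kind: "frame", sentAt, image, pose };
  return JSON.stringify(msg);
}

// Parse an incoming string. Returns undefined for anything that isn't a
// well-formed FrameMessage instead of throwing inside the receive handler.
function decodeFrame(raw: string): FrameMessage | undefined {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch (e) {
    return undefined;
  }
  if (typeof parsed !== "object" || parsed === null) return undefined;
  const m = parsed as Partial<FrameMessage>;
  if (
    m.kind !== "frame" ||
    typeof m.sentAt !== "number" ||
    typeof m.image !== "string" ||
    typeof m.pose !== "object" ||
    m.pose === null
  ) {
    return undefined;
  }
  return m as FrameMessage;
}

const wire = encodeFrame("data:image/jpeg;base64,AAAA", { nose: { x: 320, y: 140 } }, 1000);
const received = decodeFrame(wire);
console.log(received?.pose.nose.y);   // prints: 140
console.log(decodeFrame("not json")); // prints: undefined
```

One design caveat: a data URL for every frame is a lot of data to push through a data channel many times a second, so it may need a smaller canvas, lower JPEG quality, or chunking. The alternative is to let WebRTC carry the video as a media stream and send only the pose JSON over the data channel, at the cost of keeping image and pose in sync by hand.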

My next challenge is to create a data object that combines the webcam data and the PoseNet data and can be sent back and forth over the peer connection. Stay tuned for the follow-up.
